Introduction

Fingym environments are either static or dynamic.

Static

Fingym comes with some static environments out of the box, so you can get started without setting up or downloading anything else. These environments are a good way to backtest and train algorithms. The data is static and covers roughly a decade of daily OHLCV (open, high, low, close, volume) data. For example, here is the dataframe of the SPY daily environment, which runs from December 2008 to December 2019:

| | timestamp | open | high | low | close | volume |
|---|---|---|---|---|---|---|
| 0 | 12/23/2008 | 87.53 | 87.9300 | 85.8000 | 86.16 | 221772560 |
| 1 | 12/24/2008 | 86.45 | 86.8700 | 86.0000 | 86.66 | 62142416 |
| 2 | 12/26/2008 | 87.24 | 87.3000 | 86.5000 | 87.16 | 74775808 |
| 3 | 12/29/2008 | 87.24 | 87.3300 | 85.6000 | 86.91 | 128419184 |
| 4 | 12/30/2008 | 87.51 | 89.0500 | 86.8766 | 88.97 | 168555680 |
| ... | ... | ... | ... | ... | ... | ... |
| 2764 | 12/17/2019 | 319.92 | 320.2500 | 319.4800 | 319.57 | 61131769 |
| 2765 | 12/18/2019 | 320.00 | 320.2500 | 319.5300 | 319.59 | 48199955 |
| 2766 | 12/19/2019 | 319.80 | 320.9800 | 319.5246 | 320.90 | 85388424 |
| 2767 | 12/20/2019 | 320.46 | 321.9742 | 319.3873 | 320.73 | 149338215 |
| 2768 | 12/23/2019 | 321.59 | 321.6500 | 321.0600 | 321.22 | 53007110 |
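You can also work with rows like these outside of Fingym. The sketch below uses only the Python standard library. It parses the first five SPY rows shown above and computes the simple daily close-to-close return. The `parse_rows` and `daily_returns` helpers are hypothetical illustrations and are not part of Fingym.

```python
from datetime import datetime

# First five SPY rows from the table above: timestamp, open, high, low, close, volume.
RAW_ROWS = [
    "12/23/2008 87.53 87.9300 85.8000 86.16 221772560",
    "12/24/2008 86.45 86.8700 86.0000 86.66 62142416",
    "12/26/2008 87.24 87.3000 86.5000 87.16 74775808",
    "12/29/2008 87.24 87.3300 85.6000 86.91 128419184",
    "12/30/2008 87.51 89.0500 86.8766 88.97 168555680",
]


def parse_rows(lines):
    """Turn whitespace-separated OHLCV lines into dicts with typed fields."""
    rows = []
    for line in lines:
        ts, open_, high, low, close, volume = line.split()
        rows.append({
            "timestamp": datetime.strptime(ts, "%m/%d/%Y").date(),
            "open": float(open_),
            "high": float(high),
            "low": float(low),
            "close": float(close),
            "volume": int(volume),
        })
    return rows


def daily_returns(rows):
    """Simple close-to-close returns: close[t] / close[t - 1] - 1."""
    return [cur["close"] / prev["close"] - 1.0 for prev, cur in zip(rows, rows[1:])]


if __name__ == "__main__":
    rows = parse_rows(RAW_ROWS)
    for row, ret in zip(rows[1:], daily_returns(rows)):
        print(row["timestamp"], f"{ret:+.2%}")
```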

The following environments are static:

- SPY-Daily-v0
- SPY-Daily-Random-Walk
- TSLA-Daily-v0
- TSLA-Daily-Random-Walk
- GOOGL-Daily-v0
- GOOGL-Daily-Random-Walk
- CGC-Daily-v0
- CGC-Daily-Random-Walk
- CRON-Daily-v0
- CRON-Daily-Random-Walk
- BA-Daily-v0
- BA-Daily-Random-Walk
- AMZN-Daily-v0
- AMZN-Daily-Random-Walk
- AMD-Daily-v0
- AMD-Daily-Random-Walk
- ABBV-Daily-v0
- ABBV-Daily-Random-Walk
- AAPL-Daily-v0
- AAPL-Daily-Random-Walk

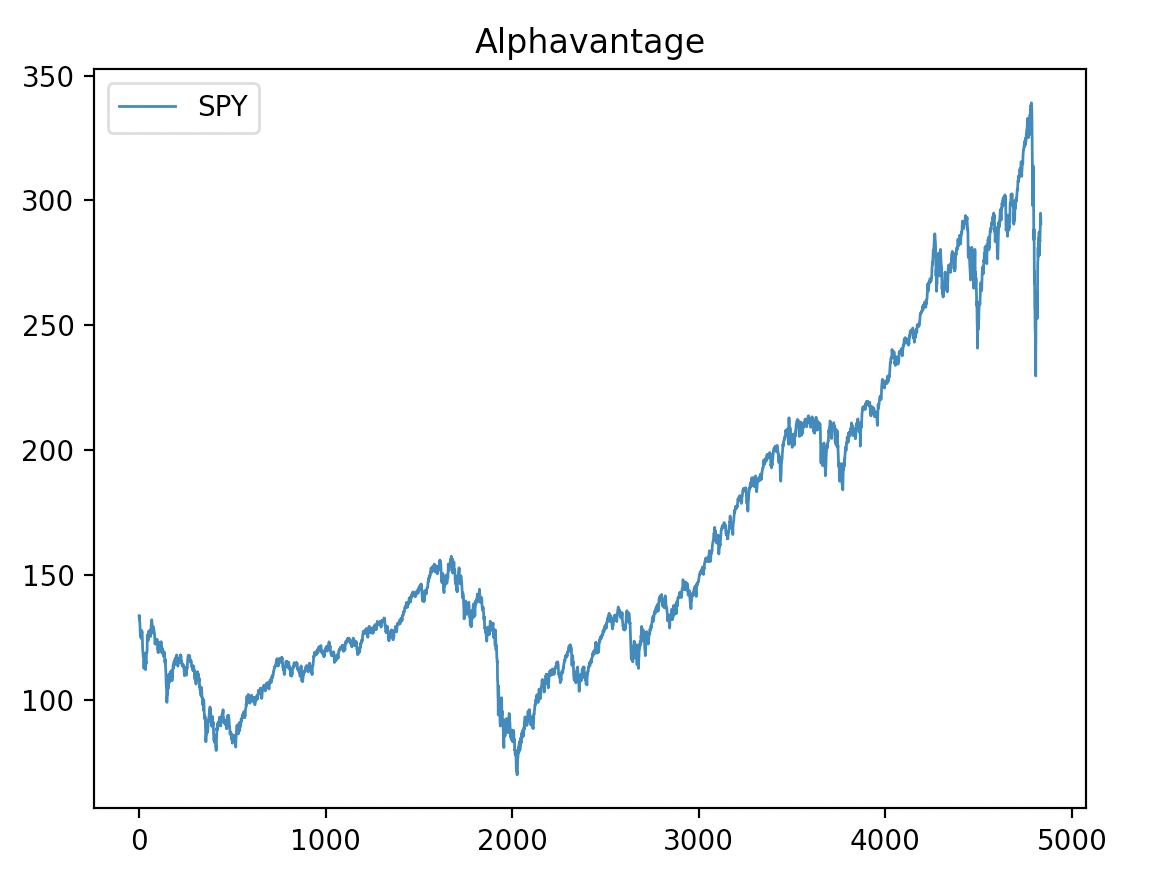

Dynamic

Fingym also supports dynamic environments that retrieve data from popular platforms such as Alpha Vantage and IEX Cloud. These let you train or test your algorithms on the latest data.

These environments also cache the data to reduce the number of calls made to these platforms. This helps you stay within the API limits that Alpha Vantage and IEX Cloud impose on free accounts.
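The sketch below illustrates the caching idea. It assumes a simple on-disk JSON cache keyed by symbol and calendar day. This is not Fingym's actual caching code. `cached_fetch` and the `fetch` callback are hypothetical names that stand in for a real Alpha Vantage or IEX Cloud request.

```python
import json
import os
import tempfile
from datetime import date


def cached_fetch(symbol, fetch, cache_dir, today=None):
    """Return data for `symbol`, calling `fetch(symbol)` at most once per day.

    The result is stored as JSON in `cache_dir`, keyed by symbol and date, so
    repeated runs on the same day reuse the file instead of calling the API.
    """
    today = today or date.today()
    os.makedirs(cache_dir, exist_ok=True)
    path = os.path.join(cache_dir, f"{symbol}_{today.isoformat()}.json")
    if os.path.exists(path):
        with open(path) as f:
            return json.load(f)
    data = fetch(symbol)  # the only place a network call would happen
    with open(path, "w") as f:
        json.dump(data, f)
    return data


if __name__ == "__main__":
    calls = []

    def fake_fetch(symbol):
        # Stand-in for an API request; records each call.
        calls.append(symbol)
        return {"symbol": symbol, "close": [1.0, 2.0]}

    with tempfile.TemporaryDirectory() as d:
        cached_fetch("SPY", fake_fetch, d)
        cached_fetch("SPY", fake_fetch, d)
        print("API calls made:", len(calls))  # API calls made: 1
```

Keying the cache by day is one simple policy: data fetched earlier that day is reused, and the next day triggers a fresh download.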

The following environments are dynamic:

- Alphavantage-Daily-v0
- Alphavantage-Daily-Random-Walk
- IEXCloud-Daily-v0

Alphavantage

You can get a free Alpha Vantage API key from https://www.alphavantage.co.

Install the Alpha Vantage Python library:

```shell
pip3 install alpha-vantage
```

This code creates an Alphavantage-Daily-v0 environment, which fetches the latest daily data for the given stock symbol. To try it, run examples/environments/using_alphavantage_env.py.

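```python
import numpy as np
import matplotlib.pyplot as plt
from fingym import fingym  # assumed import path; adjust for your fingym version

# Create the environment; replace the placeholder with your Alpha Vantage key.
env = fingym.make('Alphavantage-Daily-v0', stock_symbol='SPY',
                  alphavantage_key='YOUR_ALPHAVANTAGE_KEY')

# One slot per step of the episode.
close = np.zeros(env.n_step)

obs = env.reset()
close[0] = obs[3]

while True:
    # Apply the same action, [1, 1000], on every step.
    obs, reward, done, info = env.step([1, 1000])
    close[env.cur_step] = obs[4]
    if done:
        print('total reward: {}'.format(info['cur_val']))
        break

# Plot the recorded prices against the step number.
time = np.linspace(1, len(close), len(close))
plt.plot(time, close, label='SPY', linewidth=1.0)
plt.title('Alphavantage')
plt.legend(loc='upper left')
plt.show()
```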
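The loop above follows the Gym-style `reset()`/`step()` pattern. `step` returns `(obs, reward, done, info)`, and the episode ends when `done` is true. The sketch below turns that loop into a reusable function. It runs against a stub environment, so no API key is needed. `StubEnv` and `run_episode` are hypothetical illustrations, not part of Fingym, and the stub's three-element observation is made up for this example only.

```python
class StubEnv:
    """Hypothetical stand-in with the same reset/step shape as the loop above."""

    def __init__(self, prices):
        self.prices = prices
        self.n_step = len(prices)
        self.cur_step = 0

    def reset(self):
        self.cur_step = 0
        return [0, 0.0, self.prices[0]]  # [shares, cash, price] in this stub only

    def step(self, action):
        self.cur_step += 1
        done = self.cur_step == self.n_step - 1
        obs = [0, 0.0, self.prices[self.cur_step]]
        info = {"cur_val": self.prices[self.cur_step]}
        return obs, 0.0, done, info


def run_episode(env, action, price_index):
    """Step `env` with a fixed action until done; return prices and final info."""
    prices = [0.0] * env.n_step
    obs = env.reset()
    prices[0] = obs[price_index]
    while True:
        obs, reward, done, info = env.step(action)
        prices[env.cur_step] = obs[price_index]
        if done:
            return prices, info


if __name__ == "__main__":
    prices, info = run_episode(StubEnv([10.0, 11.0, 12.5]), [1, 1000], price_index=2)
    print(prices, info["cur_val"])  # [10.0, 11.0, 12.5] 12.5
```

The stub reports `done` on the last index, which keeps `prices[env.cur_step]` within the preallocated array.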

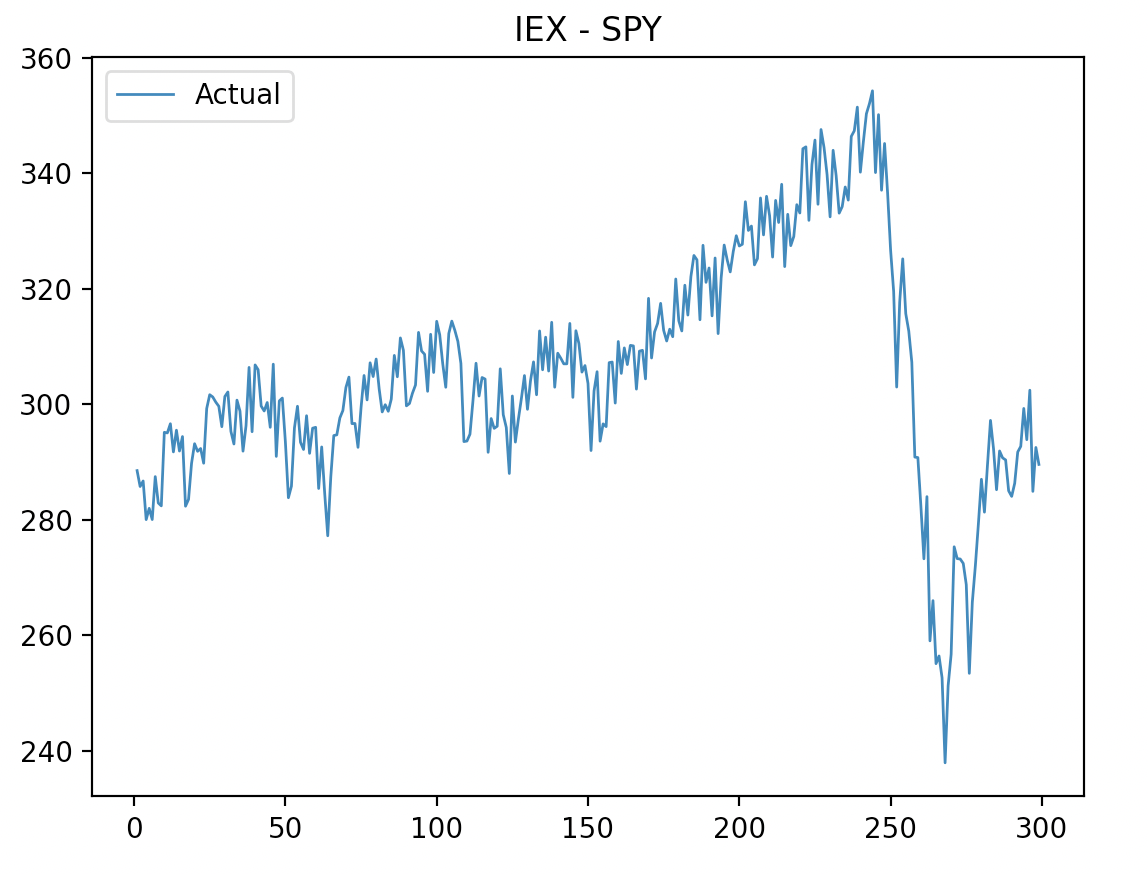

IEXCloud

You can open a free IEX Cloud account at https://iexcloud.io.

Install the iexfinance library:

```shell
pip3 install iexfinance
```

This code creates an IEXCloud-Daily-v0 environment, which retrieves daily data for the given stock symbol between the start and end dates. The environment also caches the data to reduce the number of calls made to IEX Cloud. To try it, run examples/environments/using_iex_cloud.py.

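```python
from datetime import datetime

import numpy as np
import matplotlib.pyplot as plt
from fingym import fingym  # assumed import path; adjust for your fingym version

# Date range of the daily data to retrieve.
start = datetime(2019, 3, 1)
end = datetime(2020, 9, 4)

# Replace the placeholder with your IEX Cloud token.
env = fingym.make('IEXCloud-Daily-v0', stock_symbol='SPY',
                  iex_token='YOUR_IEX_TOKEN', iex_start=start, iex_end=end)

# One slot per step of the episode.
close = np.zeros(env.n_step)

obs = env.reset()
close[0] = obs[3]

while True:
    # Apply the same action, [1, 1000], on every step.
    obs, reward, done, info = env.step([1, 1000])
    close[env.cur_step] = obs[4]
    if done:
        print('total reward: {}'.format(info['cur_val']))
        break

# Plot the recorded prices against the step number.
time = np.linspace(1, len(close), len(close))
plt.plot(time, close, label='Actual', linewidth=1.0)
plt.title('IEX - SPY')
plt.legend(loc='upper left')
plt.show()
```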

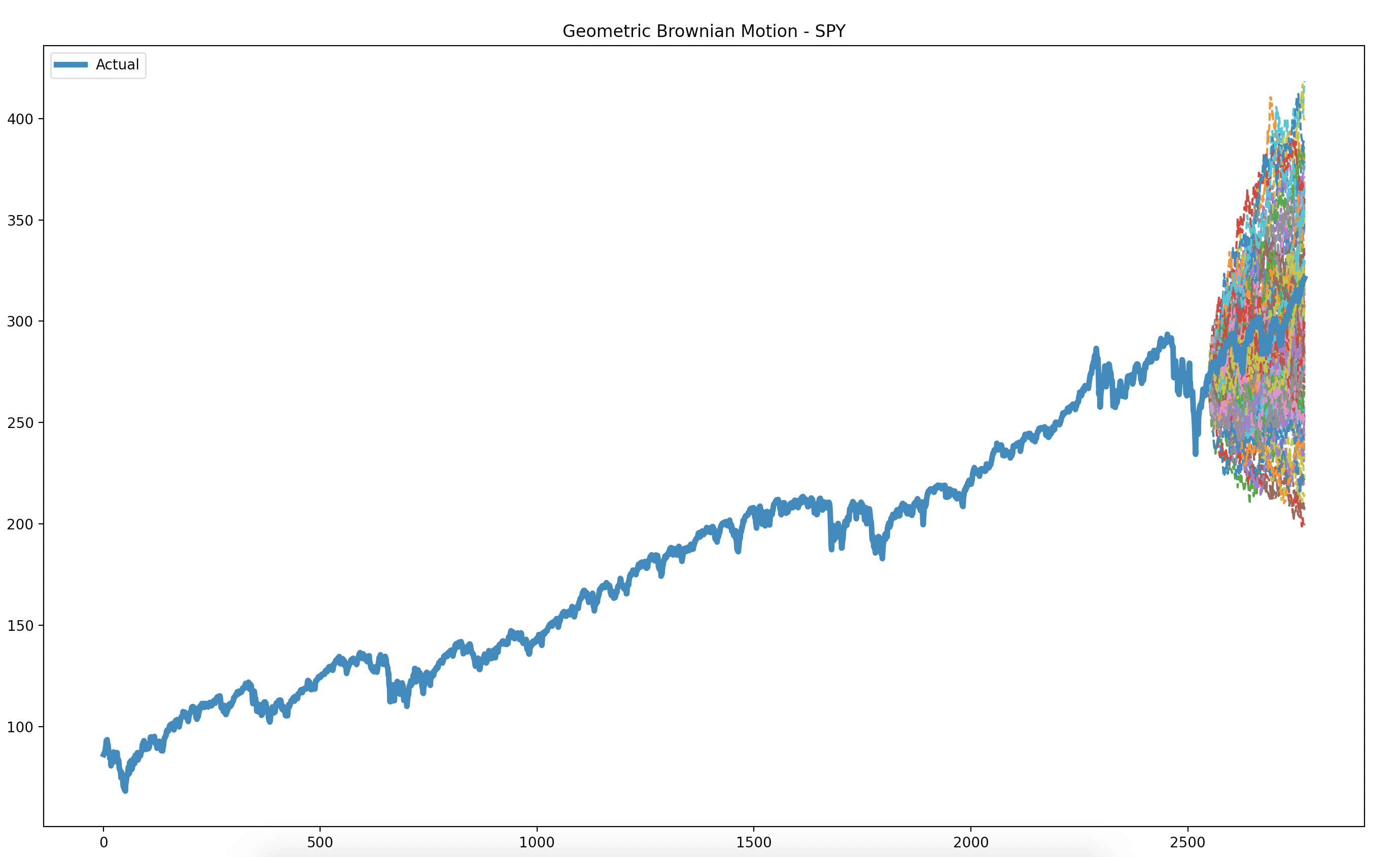

Random Walk

This code creates a SPY-Daily-Random-Walk environment and runs multiple random walks to simulate possible stock price paths for the last year. To try it, run examples/environments/random_walk.py.

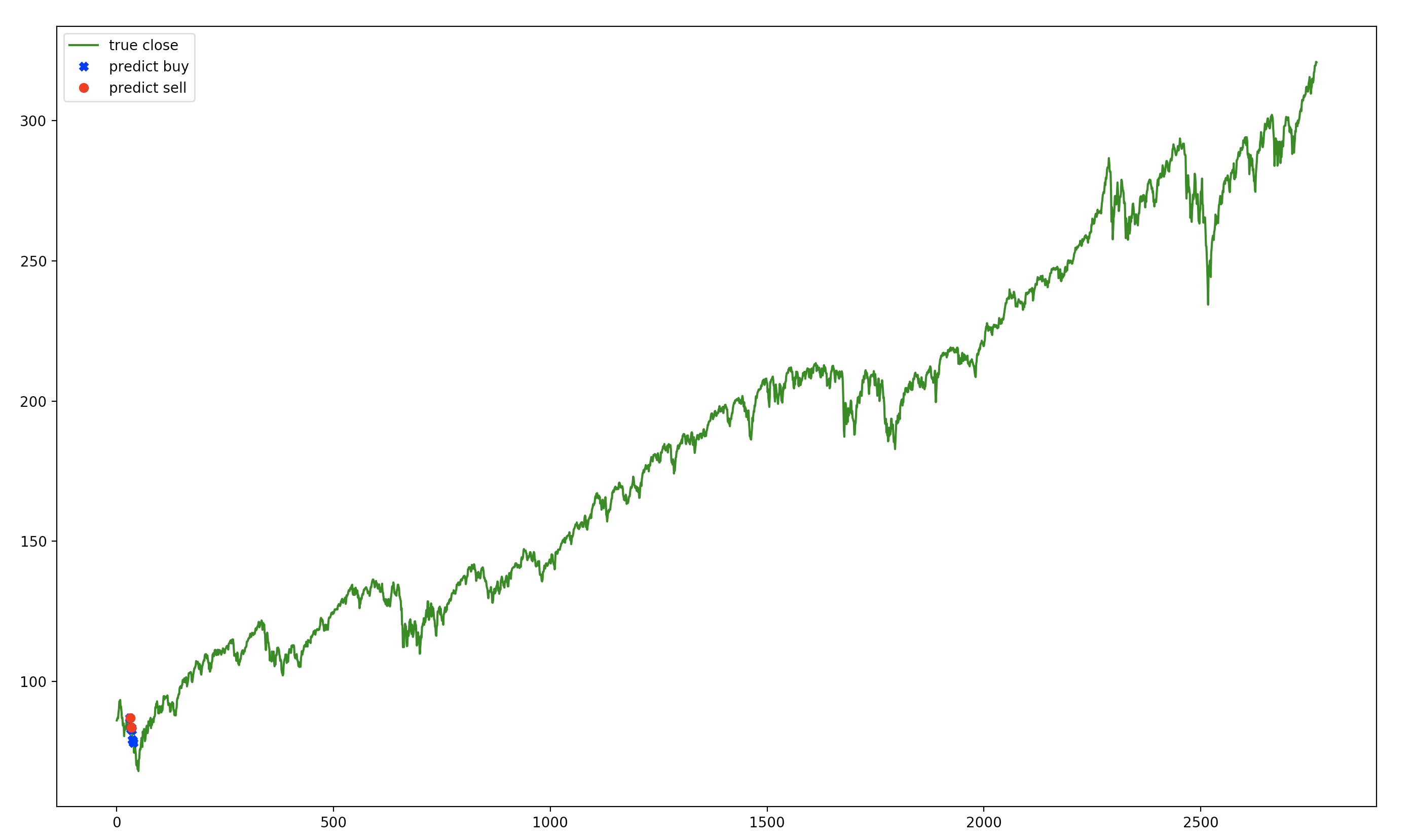

In our case, some agents that beat the buy-and-hold strategy had simply learned to buy as much stock as possible during an early dip and then hold it forever. In effect, they mimicked buy-and-hold while "learning", over many iterations, which dip to buy.

See the graph below for our deep neuroevolution agent:

A popular way to estimate risk is Monte Carlo simulation, which estimates the worst likely loss of a portfolio.
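The sketch below illustrates that idea and is not Fingym code. It simulates many one-year terminal values of a position under geometric Brownian motion, the model named in this section's plot title. It then reports the 95% value at risk: the loss, relative to the starting value, that is exceeded in only 5% of the simulations. The drift, volatility, and position size are made-up illustrative parameters, not estimates from Fingym data.

```python
import math
import random


def monte_carlo_var(value, mu, sigma, horizon_years, n_sims=100_000,
                    confidence=0.95, seed=0):
    """Estimate value at risk of a position whose price follows GBM.

    Each simulation draws the terminal value
        V_T = V_0 * exp((mu - sigma**2 / 2) * T + sigma * sqrt(T) * Z),  Z ~ N(0, 1)
    and VaR is the loss V_0 - V_T exceeded in only (1 - confidence) of the runs.
    """
    rng = random.Random(seed)
    drift = (mu - 0.5 * sigma ** 2) * horizon_years
    vol = sigma * math.sqrt(horizon_years)
    losses = sorted(
        value - value * math.exp(drift + vol * rng.gauss(0.0, 1.0))
        for _ in range(n_sims)
    )
    # The `confidence` quantile of the loss distribution (e.g. its 95th percentile).
    idx = min(int(confidence * n_sims), n_sims - 1)
    return losses[idx]


if __name__ == "__main__":
    # Illustrative parameters only: $10,000 position, 7% drift, 18% volatility, 1 year.
    var95 = monte_carlo_var(10_000, mu=0.07, sigma=0.18, horizon_years=1.0)
    print(f"95% one-year VaR: ${var95:,.0f}")
```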

Fingym has random-walk environments that generate possible future paths of stock prices. These let you train agents on a stochastic model instead of a single historical path, which can help produce generalist agents that are not tied to one path.
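The sketch below shows one way to generate such paths, assuming geometric Brownian motion (the model named in this section's plot title). It estimates per-step drift and volatility from historical log returns, then simulates new paths from the first close. Fingym's own random-walk implementation may differ. `estimate_gbm_params` and `simulate_gbm_path` are hypothetical helpers.

```python
import math
import random
import statistics


def estimate_gbm_params(closes):
    """Estimate per-step GBM drift mu and volatility sigma from a price series.

    With log returns r_t = ln(S_t / S_{t-1}), GBM implies
    r_t ~ N(mu - sigma**2 / 2, sigma**2), so sigma = stdev(r) and
    mu = mean(r) + sigma**2 / 2.
    """
    log_returns = [math.log(b / a) for a, b in zip(closes, closes[1:])]
    sigma = statistics.stdev(log_returns)
    mu = statistics.fmean(log_returns) + 0.5 * sigma ** 2
    return mu, sigma


def simulate_gbm_path(s0, mu, sigma, n_steps, rng):
    """Return a simulated path of n_steps prices starting at s0 (one step = dt)."""
    path = [s0]
    for _ in range(n_steps - 1):
        z = rng.gauss(0.0, 1.0)
        path.append(path[-1] * math.exp(mu - 0.5 * sigma ** 2 + sigma * z))
    return path


if __name__ == "__main__":
    # Closes from the SPY table above, used only to illustrate the calibration.
    closes = [86.16, 86.66, 87.16, 86.91, 88.97]
    mu, sigma = estimate_gbm_params(closes)
    rng = random.Random(42)
    walks = [simulate_gbm_path(closes[0], mu, sigma, len(closes), rng) for _ in range(100)]
    print(len(walks), len(walks[0]))  # 100 5
```

Each call to `simulate_gbm_path` gives a different plausible future from the same starting price. Training across many such paths makes it harder for an agent to memorize one specific dip.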

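```python
import numpy as np
import matplotlib.pyplot as plt
from fingym import fingym  # assumed import path; adjust for your fingym version

env = fingym.make('SPY-Daily-Random-Walk')

random_walks = []

# Run 100 episodes, each producing one simulated path.
for _ in range(100):
    real_close = np.zeros(env.n_step)
    random_walk = np.zeros(env.n_step)
    obs = env.reset()
    real_close[0] = obs[3]
    random_walk[0] = obs[3]
    while True:
        # [0, 0] is used here only to advance the walk and record prices.
        obs, reward, done, info = env.step([0, 0])
        # info['original_close'] is the actual close; obs[3] is the simulated price.
        real_close[env.cur_step] = info['original_close']
        random_walk[env.cur_step] = obs[3]
        if done:
            break
    random_walks.append(random_walk)

time = np.linspace(1, len(random_walk), len(random_walk))

# Dashed lines: simulated paths; thick line: actual closes.
for random_walk in random_walks:
    plt.plot(time, random_walk, ls='--')
plt.plot(time, real_close, label='Actual', linewidth=4.0)
plt.title('Geometric Brownian Motion - SPY')
plt.legend(loc='upper left')
plt.show()
```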

This produces 100 possible price paths for the last year, drawn as dashed lines against the actual closing prices.